WASP: Autonomous Cloud

Researchers: Karl-Erik Årzén, Martina Maggio, Johan Eker, Tommi Nylander, Per Skarin, Alexandre Martins, and Viktor Millnert, in collaboration with Maria Kihl at the Department of Electrical and Information Technology, with Erik Elmroth, Cristian Klein, and Chanh Nguyen at Umeå University, and with Amir Roozbeh and Dejan Kostic at KTH.

Funding: Knut and Alice Wallenberg Foundation through WASP (Wallenberg Autonomous Systems Program)

Time Period: 2016 - 2019

Background

An increasing number of computing and information services are moving to the cloud, where they execute on virtualized hardware in private or public data centers. Hence, the cloud can be viewed as an underlying computing infrastructure for all systems of systems. The architectural complexity of the cloud is rapidly increasing. Modern data centers consist of tens of thousands of components, e.g., compute servers, storage servers, cache servers, routers, PDUs, UPSs, and air-conditioning units, with configuration and tuning parameters numbering in the hundreds of thousands. The operational complexity is increasing in the same way. The individual components are themselves increasingly difficult to maintain and operate. Moreover, the strong coupling between the components makes it necessary to tune the entire system, which is complicated by the fact that in many cases the behaviors, execution contexts, and interactions are not known a priori.

The term autonomous computing, or autonomic computing, was coined by IBM in the early 2000s for self-managing computing systems, with a focus on private enterprise IT systems. However, this approach is even more relevant for the cloud. The motivation is that the current levels of scale, complexity, and dynamicity make efficient human management infeasible. In the autonomous cloud, control, AI, and machine learning/analytics techniques will be used to dynamically determine how applications should best be mapped onto the server network, how capacity should be automatically scaled when the load or the available resources vary, and how load should be balanced.

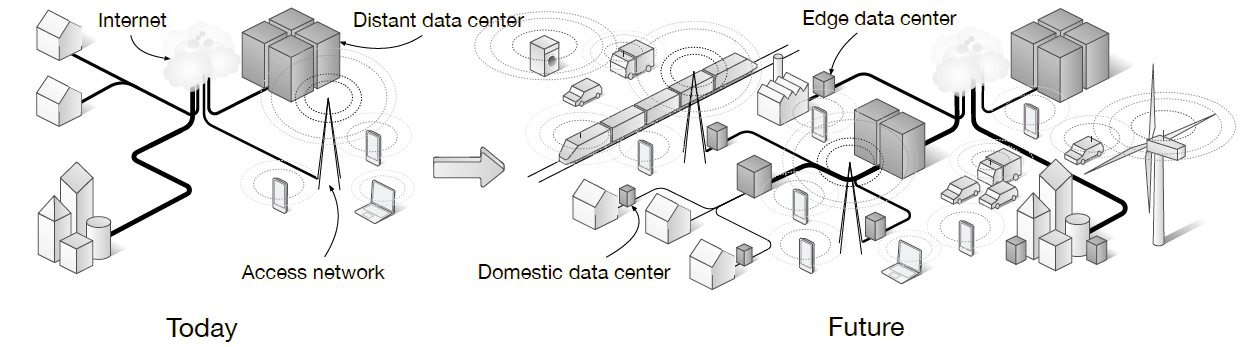

Currently there is also a growing interest in applying cloud techniques, such as virtualization and collocation, in the access telecommunication network itself. The unification of the telecom access network and the traditional cloud data centers, sometimes referred to as the distributed cloud, provides a single distributed computing platform. Here the boundary between the network and the data centers disappears, allowing application software to be dynamically deployed in all types of nodes, e.g., in base stations near end-users, in remote large-scale data centers, or anywhere in between. In these systems the need for autonomous operation and resource management becomes even more urgent as heterogeneity increases, as some of the nodes may be mobile with varying availability, and as new 5G-based mission-critical applications with stricter requirements on latency, uptime, and availability are migrated to the cloud.

The following figure illustrates how the computations in the distributed cloud are migrating from back-end data centers out in the network.

Project Outline

In the project, distributed control and real-time analytics will be used to dynamically solve resource management problems in the distributed cloud. The management problem consists of deciding the types and quantities of resources that should be allocated to each application, and when and where to deploy them. This also includes dynamic decisions such as automatic scaling of the amount of resources when the load or the available resources vary, and on-line migration of application components between nodes. Major scientific challenges include dynamic modeling of cloud infrastructure resources and workloads, how to best integrate real-time analytics techniques with model-based feedback mechanisms, scalable distributed control approaches for these types of applications, and scalability aspects of distributed computing.
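As a rough illustration of the automatic scaling decision described above, the following Python sketch adjusts the number of instances toward a utilization target, with a limit on how fast the count may change. It is only a minimal example under stated assumptions and not the project's method: the function names, the per-instance capacity model, the target utilization, and the rate limit are all hypothetical.

```python
import math


def autoscale_step(current, load, per_instance_capacity,
                   target_utilization=0.5, max_step=2,
                   min_instances=1, max_instances=20):
    """Return the instance count for the next control interval.

    Hypothetical model: each instance serves `per_instance_capacity`
    requests per second and should run at `target_utilization`.
    """
    if per_instance_capacity <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("capacity must be positive and target in (0, 1]")
    # Instances needed to keep utilization at or below the target.
    desired = math.ceil(load / (per_instance_capacity * target_utilization))
    desired = max(min_instances, min(max_instances, desired))
    # Rate-limit the change so the controller does not overreact to spikes.
    delta = max(-max_step, min(max_step, desired - current))
    return current + delta


def simulate(load_trace, start=1, **kwargs):
    """Apply the scaling rule to a sequence of measured loads."""
    history = []
    instances = start
    for load in load_trace:
        instances = autoscale_step(instances, load, **kwargs)
        history.append(instances)
    return history


if __name__ == "__main__":
    # Load rises from 100 to 400 requests/s and then drops again.
    print(simulate([100, 400, 400, 400, 100], per_instance_capacity=100))
```

The rate limit is one simple way to trade responsiveness against stability; a model-based or learning-based controller, as envisioned in the project, would replace the fixed rule inside `autoscale_step`.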

In order to develop efficient methods for resource management, it is crucial to understand the performance aspects of the infrastructure, what the workloads look like, and how they vary over time. Hence, Infrastructure modeling and Workload modeling for the distributed cloud are important topics. Due to user mobility and variations in usage and resource availability, applications using many instances are constantly subject to change: the number of instances varies, individual instances are relocated or resized, the network capacity is adjusted, etc. Capacity autoscaling is needed to determine how much capacity should be allocated for a complete application or any specific part of it; Dynamic component mapping is needed to determine when, where, and how instances should be relocated, e.g., from a data center to a specific base station; and Optimized load mix management is needed to determine how to “pack” different instances on individual servers or clusters. Since not all applications are equally important, e.g., due to differently priced service levels or due to some being critical to society (emergency, health care, etc.), the solutions to the three problems above must take into account Quality of Service differentiation. Finally, we address Holistic management to perform full-system coordination.
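To make the "packing" problem with Quality of Service differentiation more concrete, the sketch below places instances on servers with a first-fit heuristic, handling higher-priority instances first so that any shortage of capacity falls on the least important ones. This is an illustrative assumption, not the approach developed in the project: the `Instance` fields, the integer priority classes, and the single CPU dimension are all hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    cpu: int        # CPU demand in arbitrary units (hypothetical)
    priority: int   # higher value = more important QoS class (hypothetical)


def pack(instances, server_capacities):
    """First-fit placement, most important and largest instances first.

    Returns (placement, unplaced): a dict mapping instance name to
    server index, and a list of instance names that did not fit.
    """
    remaining = list(server_capacities)
    placement = {}
    unplaced = []
    ordered = sorted(instances, key=lambda i: (-i.priority, -i.cpu))
    for inst in ordered:
        for idx, free in enumerate(remaining):
            if inst.cpu <= free:
                remaining[idx] = free - inst.cpu
                placement[inst.name] = idx
                break
        else:
            # No server had room; lower-priority instances end up here first.
            unplaced.append(inst.name)
    return placement, unplaced


if __name__ == "__main__":
    apps = [
        Instance("batch", cpu=2, priority=0),
        Instance("video", cpu=3, priority=1),
        Instance("emergency", cpu=2, priority=2),
        Instance("web", cpu=2, priority=1),
    ]
    print(pack(apps, server_capacities=[4, 4]))
```

In this example the two servers cannot hold all four instances, and the ordering ensures that the low-priority batch job is the one left unplaced.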

The primary software infrastructure will be based on Calvin, an open source application environment developed by Ericsson and aimed at distributed clouds for IoT services. Calvin is based on the well-established actor model, scales well, and supports live migration of application components. We believe this infrastructure is suitable for investigating the application performance behavior of future commercial systems and for validating our developed management solutions. It will enable accurate estimations of, for example, application latency and system loads.

The project results have the potential to be demonstrated in several WASP demonstrator arenas, including the Autonomous Research Arena (ARA) and the Ericsson Research Data Center (ERDC), as well as in different university lab facilities.

Industrial PhD Projects

The project contains three industrial PhD student projects. These are:

- Mission-Critical Cloud - PhD student: Per Skarin, Ericsson Research; Academic Supervisor: Karl-Erik Årzén; Industrial Supervisor: Johan Eker, Ericsson

- Autonomous network resource management in disaggregated data centers - PhD student: Amir Roozbeh, Ericsson Research; Academic Supervisor: Dejan Kostic, KTH; Industrial Supervisor: Fetahi Wuhib, Ericsson

- Autonomous learning camera systems in resource constrained environments - PhD student: Alexandre Martins, Axis; Academic Supervisor: Karl-Erik Årzén; Industrial Supervisor: Mikael Lindberg, Axis